The Future of Artificial Intelligence with Michael Kanaan

In this episode, host Don MacPherson is joined by AI expert, Michael Kanaan. Together, they explore the future of artificial intelligence including how it will be used both at home and work, its impact on the workforce, and the potential for AI to both help and harm us. They also discuss the ways in which AI surveillance is being used to target certain groups around the globe and the ethical questions that arise from creating AI algorithms.

Michael Kanaan is the author of T-Minus AI and was the first chairperson of artificial intelligence for the U.S. Air Force, Headquarters Pentagon. In that role, he authored and guided the research, development, and implementation strategies for AI technology and machine learning activities across its global operations. He is currently the Director of Operations for Air Force / MIT Artificial Intelligence.

Don MacPherson: [00:00:00] Hello, this is Don MacPherson, your host of 12 Geniuses. I have the incredible job of interviewing geniuses from around the world about the trends shaping the way we live and work. Today, we will explore the future of artificial intelligence. Our guest is Mike Kanaan. He is the author of the book T-Minus AI and Director of Operations for the partnership between the US Air Force and MIT.

We discuss how AI will change every job and nearly every aspect of our lives. Mike argues that AI is not to be feared. He says that it can be an incredibly powerful tool for doing mundane tasks while allowing people to focus on more human, high-value activities. We also discuss how AI is being used in China and potential pitfalls if AI is not used in an ethical manner.

This episode of 12 Geniuses is brought to you by the think2perform RESEARCH INSTITUTE, an organization committed to advancing moral, purposeful, and emotionally intelligent leadership.

Mike, welcome to 12 Geniuses.

Michael Kanaan: [00:01:13] Thanks, Don. It's a pleasure being on the show with you today.

Don MacPherson: [00:01:15] Why don't we start with your background? Could you talk about your military service and what inspired you to write T-Minus AI?

Michael Kanaan: [00:01:25] Well, I began my career after graduating the US Air Force Academy as an intelligence officer at the National Air and Space Intelligence Center. This was my first job and it was commanding a new mission. We called it ACES HY. It is an acronym for the Airborne Cueing and Exploitation System Hyperspectral. It was the first of its kind. And we were mere months out from flying our first missions in the Middle East. A hyperspectral sensor works much like our eyes do except better. You and I see in three color bands, but a hyperspectral imager, it sees in hundreds.

And when we look across these color bands during the day, of course, because of, well, sunlight, we can identify certain materials, spectrally significant in the spectrum of which it collects. Long story short, it was this which initially led to my passion for AI. It was 2011. What was happening then? ImageNet. Which was a large visual database designed for use in visual object recognition software research. And back then it achieved a major milestone by performing largely commensurate to human performance.

And also this brought AI out of its last winter. When you think about it, we were collecting this massive data cube that somehow we needed to send back via satellite data to be processed locally in the States. All while live flying in the Middle East with only about 16 of us there flying the plane, moving the camera and doing all the analysis in near real-time to, at a moment's notice, let a sailor, soldier, airman, Marine on the ground know, hey, there's something you need to be concerned about. And sometimes it was literally turns away, seconds, minutes from, for instance, the convoy on the road. Because again, we could definitively detect and see what the human eye couldn't, such as explosive material. This was a big deal and for those of us who know how to frame an AI problem, it was perfect.

That began a groundswell because I wasn't the only one wondering how much quicker could we have told someone? What could we have done better? How could we make smarter, righter choices? And even without it, we took 37 tonnes of already weaponized, explosive material off the battlefield and were directly recognized as saving 2,500 lives at very timely and critical points of discovery. And despite any subsequent professional accolade or even recent personal accomplishment with the book, it's the women and men of, and on this mission that I'm most proud of to this day and I don't think it'll ever change.

And then from there, I moved on to be the Director of Target Development at the Counter ISIS Operation Inherent Resolve campaign. And then from there to the Pentagon, co-chairing AI for Headquarters Air Force. Now here standing up a new important partnership between the Department of the Air Force and MIT.

Don MacPherson: [00:04:19] And when did that start, this partnership with MIT?

Michael Kanaan: [00:04:23] In earnest, in January of 2020. It is pursuant to a cooperative agreement between the Department of the Air Force, MIT Computer Science and AI Lab, and of course, MIT Lincoln Laboratory, to drive three key points.

One, to lead flagship developments and programs on behalf of the Department of the Air Force, developing AI for a public good. The second is creating scalable AI education for the department. And the third is to reinvigorate that triangular important relationship on the topic of AI to lead in ethics, morality, and safety. And use that in line with our visions of human dignity for the country.

Don MacPherson: [00:05:07] Talk about what your definition of artificial intelligence is because this show is going to be the future of artificial intelligence. So how do you define AI?

Michael Kanaan: [00:05:18] Ooh, the penultimate question. I'm a fan of starting with analogies and if I could pick up an electrical outlet near me now, or the microwave in the kitchen, I'd bring it over, or maybe I'd bring over a hammer.

And for most of us, hopefully all of us, we probably have one of these things I've mentioned in our house. We're not electricians, but we use electricity. We're not engineers, but we use microwaves. Many of us drive cars, but fewer can build and fewer can both code and build the software in our cars we have today. And all of us use hammers without being master craftspeople.

While we may not understand each of these things intimately, they're familiar. And with each of these everyday instruments, we can be incredibly effective with them, yet we can also do damage with them, intended or through unintended consequences.

And AI itself is a lot like that in certain ways. Here's what AI is. Think of it as a flashlight. It is a mirror and/or our canary in the coal mine. It illuminates otherwise ignored or undiscovered latent patterns in the data that we memorialize in the world around us. Once upon a time, when it came to interacting with our machines, our data, we had to explicitly code its input with a semblance of an output in mind.

But because of some advances, primarily in computer architecture, software, programming languages, and algorithms, we no longer need to hardcode this reality (which is really how we frame problems and communicate with our machines) in quite the same manner. AI applications are just designed to analyze data and formulate predictions without any overall guidance from us. That's it.

Don MacPherson: [00:07:07] One of the misconceptions is that AI is going to take over the world and human beings need to be afraid of AI because it will control and manipulate us.

Michael Kanaan: [00:07:18] I think whether or not you're well-versed and a professional in this space, or just living everyday life that you, to a certain degree, feel that way about this technology. And if we can help educate and inform people to say, well, that's not really the case.

If we ever even get there, which I highly doubt, I'm willing to say that out into the world. For myself, when there's a fire at the door, I'm not really worried about the lightning in the distance. The current state of artificial intelligence will profoundly impact us for decades to come if nothing were to change.

Don MacPherson: [00:07:56] When you think back to 2011, that story that you shared earlier, and think about that moment and the end of 2020, which is when we're recording this, how has artificial intelligence changed human life during that period?

Michael Kanaan: [00:08:13] Well, what I think you're driving at is it's all around us and it characterizes. So...

Don MacPherson: [00:08:19] That's exactly, that's exactly what I'm getting at because most of us interact with it every day. And multiple times a day. And we're not even aware of it.

Michael Kanaan: [00:08:30] Well, I really like this idea of separating the words, "character" and "nature" of something. The nature of everything going on is the same. The character is what changes. So at least under the hood, it's all around us, but despite everything at risk and all the conversations... and the fact, again, it's the reason you see the ads you see every day, the news you read, often the music you listen to. It writes nowadays and even makes art.

But what it's really changed and what's different, is once upon a time in the industrial age, we had to trade off between products and services — or just anything in our lives — that something we had was either better, faster, or cheaper than something else we had.

Then some competitor in business, or you personally, would fill the void with the other missing part of those three points. Because you could have two, but not all three. But in the digital age, that's not the case. You can be all three at once. So it largely changed these aspects and it also gave rise to these massive corporations or just a singular device you have, like a computer or a phone, that you can do so much with now.

Alternatively, it's the reason you see what you see, which is different than what I see. I think a really telling moment for people to practice where it really hits home is to call up a friend and do a Google search. And what you'll likely find is you get something wildly different than the other person. That is, your top return is on my page two and vice versa.

So truth, or our truths, get obfuscated quite quickly. And extend that to news and misinformation, disinformation we read… you can see how this plays out, literally everywhere. And sometimes makes it tough to be human right now.

Don MacPherson: [00:10:24] We are releasing this episode in 2021. What does AI look like in 2031?

Michael Kanaan: [00:10:32] We want the minutia out of our lives. We as humans are doing far too many non-human things. So what I'm hopeful for with artificial intelligence is that it means we humans get moved elsewhere. And I'm hopeful to this point that it's more human experiences and jobs. In this respect, done right, I'm quite bullish on the future of the workforce and us as humans more generally. Like sort of the Athenian model of us being thinkers, putting our machines to work. Again, provided we do it right. Which I'm not so bullish on, due to the education problem.

Maybe a world comes about where we pay teachers more, reward stay-at-home parents, pay artists, the human spirit stuff. And as for AI's role in that, we often conflate and combine the words automation and artificial intelligence. Automation may perhaps replace the quote, "line worker" and for what it's worth, if automation, certainly sometimes driven by AI, can replace someone's job, they shouldn't be doing that job in the first place.

So I hope it gets to humans doing some more human things. That's a good goal to have. But how do we shift the narrative and provide a different value proposition? What I'm hopeful for is this rise of the idea, which is probably not totally reflected in quarterly earnings reports — despite what you might hear from organizations. I want to commend all the work that's being done — is that we can do well by doing right and doing good.

So where I think we're getting it right and positive in the future, to get us to what I've described, is where we make mistakes. Microsoft's chatbot Tay that they took down, Amazon changing its hiring practices because a machine learning algorithm illuminated biases we all carry, John Oliver having a bit about Uyghurs in China just a few weeks ago. It's the conversation in Western like-minded nations saying, “how do we think about a fair and representative collection of data to build the algorithms that affect us all?” Another one is the slow, phased rollout of OpenAI's GPT-3, the standup of ethics boards, the creation of a National Security Commission on AI. I think we're getting it right in those spaces and making sure that it represents who we are.

I recently did a hackathon for the city of Detroit, one of those kinds of barn-burner, not-a-lot-of-sleep weekends for 70 hours, helping address the fact that 40% of the city (and this goes across the country) doesn't have internet access. That's not just Detroit, it's our rural communities, too.

If we're going to shift the narrative to this positive place that we're talking about, then I think we can start through this and making sure that the technology represents us. If you are not characterized in the data, you are an anomaly and it will not serve you. I don't want an Alexa in my home only trained on data from Southern white gentlemen or just people in Northern California.

Don MacPherson: [00:13:44] When you said at the very beginning, we are doing so many or too many non-human things. Can you just give a couple of examples of the types of non-human things that we are doing?

Michael Kanaan: [00:13:54] A young attorney out of a great law school looking just for case law and precedent through 10 million pages. Perhaps a worker just doing financial data on Excel files that they cannot possibly as a human consume all of. I think a lot of those non-human aspects where we're interacting with too much data and not being creative in what we do. Creativity comes from us. Driving out the minutia we can do in each of our businesses.

Don MacPherson: [00:14:30] Yeah. Those are really good examples. So using a query to quickly get access to information, and then allowing that attorney to do more higher-value work. Is that an accurate way of summarizing that?

Michael Kanaan: [00:14:44] I think it's value at the end of the day. What is the human doing? And frankly, for your personal businesses or companies, you're not getting value out of the human doing those things. For example, the Stanford law grad essentially just looking for precedent. Our machines can tell us what the precedent is. Right? Let's develop them more.

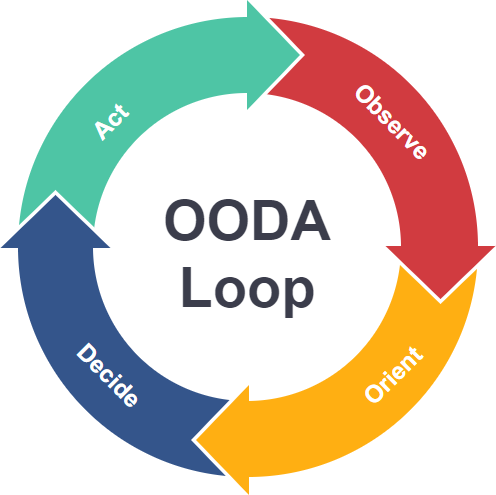

Have you ever heard of the OODA loop?

Don MacPherson: [00:15:10] I have not, no.

Michael Kanaan: [00:15:12] The OODA loop is from a former Air Force officer. I was really excited on a trip to a VC firm. They sat us down, they said, "do you know about the OODA loop?" And I said, well, that's what we do in the Department of the Air Force, that's where it comes from.

The idea is this, in a circular motion, people who are in business, you observe, orient, decide, act. And the goal is to compress that loop as much as you can for the machines along the observe and orient paths. I think we can use our machines to do that faster and leave us to the decide and act portion. Because that's what we're good at. And if we keep that in mind, you start in your businesses and personal lives saying, what can I not observe and orient myself to so I'm only in the domain of deciding and acting upon it.

Don MacPherson: [00:16:07] Another thing that I wanted to ask you about is, and I found this remarkable in the book, I think I had heard of Microsoft Tay but in your book, you devote some space to it, and then I actually looked it up afterward. Could you just quickly describe what Tay was, what the intention was, and then what the results were.

Michael Kanaan: [00:16:28] Of course. One of the most unfortunate uses, well, I wouldn't even call it unfortunate, was using an AI chatbot named Tay, which is an acronym for Thinking About You. It was created by Microsoft and was launched on Twitter back in 2016. And it's an algorithm that was designed to portray the language patterns of a 19-year-old American girl, as it engaged and interacted with other users on Twitter's platform.

And its first tweet was of course cheery and inoffensive, you know, "hello world!" You know, the first thing you do in coding is do the "hello world," and then it was followed by, "can I just say I'm stoked to meet you? Humans are really cool." And although it was a friendly start, things deteriorated rather quickly because much like I mentioned, machine learning applications learn from the data we memorialize.

Twitter's a pretty dark place. Very quickly, the tweets back to Tay from humans were filled with intentionally racial and sexually biased slurs and no AI is there necessarily to say, well, how does this align with values? It just mimics what it saw or the examples of data that it sees. And only in a number of hours, what it ended up doing was putting out frighteningly prejudiced and outspoken racial and xenophobic slurs of every kind imaginable. And for anyone like yourself, you can go Google them and see what it was.

But interestingly enough, what Microsoft did is shut that down right away. And what I think is there's something good about that. When I mentioned, how can we think about artificial intelligence and why would we embrace these things? It's our canary in the coal mine. It was just a mirror on exactly who we were in that scenario.

So privacy and anonymity are a problem that we need to work out. And that plays itself out to the point of AI, of computer-generated content and us asking the question. What is real? And I think that question will underpin many of the issues we'll face for a generation to come.

Don MacPherson: [00:18:41] So getting back to 2031 and what AI looks like. You were going to get into what it could look like from a negative perspective. So you gave us the positive outlook. What's the negative outlook?

Michael Kanaan: [00:18:53] Well, all of this technology is inherently dual-use to the point that again, it's the purposes to which we put our machines. Here's a great example. We all hear about deepfakes. They're relatively fun technology. They're also scary because it creates misinformation and disinformation and faked videos and the like.

It's also the same technology, the underpinning techniques, that are the best detector of breast cancer in mammograms as we speak. So development, public development, which the commercial AI and R&D world has looked at as an obligation, which by the way is right in most circumstances, simultaneously advances negative applications of it.

How do we have that conversation? Think about this. The leading machine learning developments, as we speak now, openly and publicly, are reinforcement learning algorithms. And they're literally done on a war game — StarCraft 2 and Dota in particular.

We have to have a question of, well, do we control that? Again, what it really illuminates is why it's so important for us to make sure that we are telling the right narrative and holding our value proposition. Which is a way to undo this whole, "well, I like the capability of a Huawei phone, so I'm going to use it," or "I like the capability of TikTok, I'm going to use that." But think about the arguments you would have to make to someone to say you shouldn't use TikTok because you know that bunny face you make on your TikTok? Well, that's training an artificial intelligence algorithm to be more performant, using your data, provided back to the Chinese Communist Party to better use it for a digital authoritarianism crackdown on Uyghur minorities.

What a gnarly argument to make. It's correct. But we got a journey ourselves along the way. So in the short term, trading off capability or trading off what we like that are hidden beneath a lot of layers that aren't in line with how you probably view the world: Western freedoms and democracies.

Well, I hope we don't make all of those trade-offs now. And we live in a world where these repressive technologies dominate 60% of market share, as opposed to: they are going to exist, it is the way that it is, but we maintain them at that 20% range globally.

I think what the future looks like... well, you can understand how those underlying biases and ways in which the world is viewed or these computer vision algorithms used for oppressive purposes could put us into a pigeonhole eventually. And again, it starts with education broadly to tell that narrative. So we move down a path where we still have the moral high ground.

Don MacPherson: [00:21:59] My guess is that AI is going to fundamentally change just about every single job on the planet. Would you agree with that?

Michael Kanaan: [00:22:09] I would agree that there is no job on the planet that AI doesn't have its rightful place to do the job better. But with that being said, it's not the answer to everything. It's not a Miracle Whip at the end of the day.

Don MacPherson: [00:22:26] And what about job elimination? Complete job elimination. What jobs will be eliminated?

Michael Kanaan: [00:22:33] We have a lot of tasks that we deem as jobs right now. Like literally that's the job. But we should reframe to say no, no, no, that's a task. Is it right for a machine or not? I hope that what we do is build enough pivot space to be thoughtful about how to interact in a world where maybe a job has disappeared. For instance, tax preparation. A significant job that was something you went to college for that earns you upper-middle-class wages and then in swept TurboTax. And outside of complicated tax returns, largely for most people, they don't go to an accountant for that; it's just done.

And all of those people were displaced. The question we should ask is, whether or not we know what job it is, are we doing right by those people after they were displaced? All that money just went to TurboTax and it completely changed the landscape of being an accountant. How do we work in the middle? If a job is replaced, we understand a singular technology, created for for-profit purposes, undid an entire market. Let's have a conversation about how we do right by those people.

So I'm not quite sure, but I think about jobs in the future we don't think about now. AI ethicists, 30-40 years ago, we would have never thought about. I hope we do the same thing here. Just make sure that we educate people and provide them with space to, if their job is compromised, maneuver them elsewhere. Slowly burn down what we know will be compromised.

Don MacPherson: [00:24:11] Yeah, you're hitting on a point that I talk about a lot, which is from a leadership perspective, from an organization perspective, we need to identify jobs that are threatened, people in those positions who are threatened, retool them, educate them, let them know three years in advance, not three months in advance.

Your job is going to be fundamentally different than it is right now. And here's a pathway for a job that you can flourish in and let's move down that path together. I think there is an incredible responsibility that both leaders and organizations have in order to do that because this is probably the biggest worry that I have. Because if you don't do that, Mike, then the person who's eliminated, they go down the path of, "Oh my gosh, I just lost a good-paying job, my skills are no longer valued in the marketplace. I'm gonna lose my livelihood. My family's going to be hurt. I'm depressed. Now I'm going to turn to alcohol or drugs," and then that's just a horrible path to go down.

That's my fear with this: that we don't necessarily have leaders in our organizations who are prepared to do this or organizations that are forward-thinking like that. And I do believe you need to think out three years and not three months or six months or nine months.

Michael Kanaan: [00:25:29] Well, think, from a contemporary standpoint, how poorly we've done this. The coal worker analogy. We said we will drive you out of your business. And coal workers said, "well, I'm not going to all of a sudden learn to code." Well, hold on a second. Maybe you could. But let's find something different. Or truck drivers, same thing. "Well, I guess artificial intelligence and automation are going to upend me. And why would I be valuable?" Well, there are other things that we could figure out about that business and supply chain management and the like. We owe that conversation now.

And it's tough in the short term, again, that if my pressure is to make profit now and to return to my shareholders and the like, it's hard to have a three-year out conversation to say, well, you know what? This decision that I made, the money I just expended to retool and retrain someone, that'll pay itself out in dividends, you know, three, four, or five years down the road. If we can generate this society where the value is doing good, then I think we can start to have the conversation you just illuminated.

Don MacPherson: [00:26:36] You devote a whole chapter in your book on China. Could you talk about how AI is being used in China and are they the supreme country when it comes to AI usage?

Michael Kanaan: [00:26:49] Yes and no. So as for China, a lot of people think of them as some backwater nation. I don't think we quite comprehend... and by the way, I do want to be precise with my language here. The Chinese Communist Party does bad with AI. China, Chinese citizens do not. I think we're all well-served to be precise with that language.

But we think of it as a backwater nation. As opposed to how digitally advanced they are through experience and through top-down directions to digitize all of their interactions and what they do. And while their lives or the pictures we see are utter atrocities and wholly unacceptable to have, you know, 20,000 people living in a building with no more space than the island in my kitchen, it's mutually exclusive of the fact that their lives are better because of digital applications, such as WeChat. They're quite effective.

So broadly, China's a major driver of AI surveillance worldwide. Technology linked to Chinese companies, particularly Huawei, Hikvision, ZTE. They supply technology as we speak to 63 countries and 36 of them have signed on to China's Belt and Road Initiative.

And Huawei is the big responsible agent for much of this. No country, no company comes close. And Chinese Communist Party product pitches come with loans that push people to, of course, buy more of their equipment. And this is particularly clear in countries like Kenya, Zimbabwe, Ecuador, where Chinese models are gaining experience and they're exporting this, so they're better and more effective users of the technology. But the concern is about how much of these incentives come with the acceptance of repressive technology.

In Africa alone, China is its largest funder of infrastructure. And that number is somewhere in the ballpark of $150 billion. And of course, I can understand taking that loan when you don't have an alternative. The question is what does that come with? When, for instance, in Kenya, 20-some-odd percent of its external debt is to China, what happens then? And how does that story play out when it's the case such as they need 5 billion more dollars right now to finish a cross-country train track for their logistics network. But China's holding that to accept more of its goal of exporting AI tools to gain influence with these repressive technologies.

So it means that all of the advancements in China have a circular effect of informing the Communist Party and gaining further experience and exportation through implementation. And it plays out in everyday life right now to a significant degree. A fact like the crackdown on Uyghurs, through AI such as computer vision, and further development of social credit systems and internal monitoring platforms.

So in the book, what I try to do is tear down the red wall and talk about some realities that we don't often see of just how effective they are with the use of this technology. When for every three people in China, a country with a billion people, there's a camera for them, monitoring them. Or the fact that you and I on our phones, we have Uber Eats, Postmates, right? Or Grubhub. They have one application. If you want to have a private conversation with a family member, or you want to order a dog walker, or you just simply want to spend money on food, you have to use the same application. So digital authoritarianism in the short term is incredibly effective because they use the technologies and they get good at using the technologies.

The question in the long term is, how does that play out when we start paying attention to the value proposition and how it aligns with our views of the world?

Don MacPherson: [00:30:56] In the book, you do talk about China's Belt and Road Initiative. And in the margin, I said, this is like arming other countries during the Cold War. So essentially what the Soviet Union had done to spread their way of life and their government system. Do you see the same parallel there with the Belt and Road Initiative?

Michael Kanaan: [00:31:18] A significant parallel. We're to this point of, the nature of it is not different. But the character is. And without being informed about how it all plays out and how it could pan out 26, 30 years from now— you're talking about a society that has thousands of years of history, right? Thousands. We have a couple hundred.

So the world is seen in a little bit of a different way in long-term strategy and what strategic gain ends up looking like. But there is no debate that the aims or the views of the things we were fundamentally built on, the idea of human dignity, is wildly different between these two cultures.

Don MacPherson: [00:32:09] There are some existential threats that human beings face. So what can AI do to solve some of these existential threats and specifically climate change, if you've thought about that?

Michael Kanaan: [00:32:22] It can do a lot. Here at the Air Force accelerator at MIT, what we're developing is AI for a public good. So weather matters to the Department of the Air Force for obvious reasons and in a lot of different ways. In fact, we provide much of the weather collection to the rest of the world, so we can better model what's going on. So modeling and simulation is a really special place where AI has a role to play.

So when we think about global warming and the threat of climate change, which is real by the way, which is a national security issue, what we can do is how can we better model and inform our solutions. Or illuminate some patterns we perhaps have not seen. For instance, icebergs melting over the polar ice caps.

Well, from a national security perspective, I care about that because, you know, the world is circular. So it gives inroads automatically to perhaps adversary nations to have access into Northern Canada and vis-a-vis down into the United States. So one of the projects we work on is taking weather surveillance from areas around a locale where perhaps we haven't invested, or it's too hard to maintain, like in the North Pole or Antarctica, a weather surveillance or a weather radar station.

Well, how can we use artificial intelligence to simulate the existence of a ground weather station or predict flooding that we might not have seen based on elevation data, and then in relation to historical weather patterns so that when there will be flooding, we can say this area might be flooded to a certain degree. Or this is where ice caps are melting.

I think it has a significant role in what we deem as modeling and simulation. So we, as humans, aren't waiting for the problem to encounter us. We're addressing the problem early, and that's much the way AI has certainly driven a lot of our COVID response, whether that's predicting where areas are going to need beds, or perhaps National Guard attention and the like, or just logistics and materials. We can use AI to say, during the surge, this is going to be a problem with the pandemic, or alternatively, we can simulate a whole bunch of viral responses or how we create a vaccine in a digitally safe space.

Don MacPherson: [00:34:55] I talk about AI and biotechnology and quantum computing as faith benders. So these three technologies. I think about how AI is going to change what it means to be human. And the question I have for you is— and I know you're a science fiction fan— the question is, is humanity on the precipice of evolving into something else?

Michael Kanaan: [00:35:17] How about I start with a science fiction writer then? Arthur C. Clarke. And he has three adages, or his three laws, as they're commonly known. And they're pretty widely cited, and the first is: when a distinguished but elderly scientist states that something is possible (by the way, this was written back in the sixties of course, so the law itself uses the pronoun he and it should be they), they are almost certainly right. But when they state that something is impossible, they're very probably wrong.

The second is the only way of discovering the limits of the possible is to venture a little way past them into the impossible. And here's the third point to what you bring up. Any sufficiently advanced technology is indistinguishable from magic. There's where you talk about the faith bending. But it doesn't need to be a conversation of whether faith is right or wrong. It's a conversation about growth and perhaps how to understand us better as humans, and then furthermore, to maybe bolster our faith.

And it gets to this point of when it comes to technology, whatever has granted this conscious being, that we have the ability to create... which is really what makes humans special, right? Everyone says, well, we use fire and it's like, well, there's a lot of studies that crows use fire to burn their nest.

What it really is is we create things that would not exist in nature on their own. For instance, aspirin. That's what makes us special. So whether some creator has endowed us with this, whether or not it just comes from the current of our biological selves and the electrical impulses, we can do that.

And what it says to us is let's learn more, let's grow and create, because on either side of the equation, of being very faith-driven or not, we become better for it. So that's why I would bring this up and, again, any good technology seems like magic.

Don MacPherson: [00:37:30] Where can people learn more about you and your book?

Michael Kanaan: [00:37:34] The book itself is available on all major platforms. Amazon, Barnes and Noble, and the like, or more importantly, in your local bookstore. Local bookstores matter to local communities and they are having a hard time. So anytime you can support a local bookstore, I would urge people to do that.

And then on Twitter, Instagram, Facebook, LinkedIn, it's @MichaelJKanaan.

Don MacPherson: [00:38:00] Mike, I really appreciate your time today. Thank you for it. And thank you for being a genius.

Thank you for listening to 12 Geniuses and thank you to our sponsor, the think2perform RESEARCH INSTITUTE.

Our next episode will explore the future of cities. Our guest is New York City Commissioner, Penny Abeywardena. That episode will be released March 9th, 2021.

Thank you to our producer, Devon McGrath, and our research and historical consultant, Brian Bierbaum. If you love this podcast, please let us know by subscribing and leaving us a review on iTunes or your favorite podcast app. To subscribe, please go to 12geniuses.com.

Thanks for listening, and thank you for being a genius.

[END]